Investigation

The CounteR Project: When European Democracy Outsources Thought Surveillance

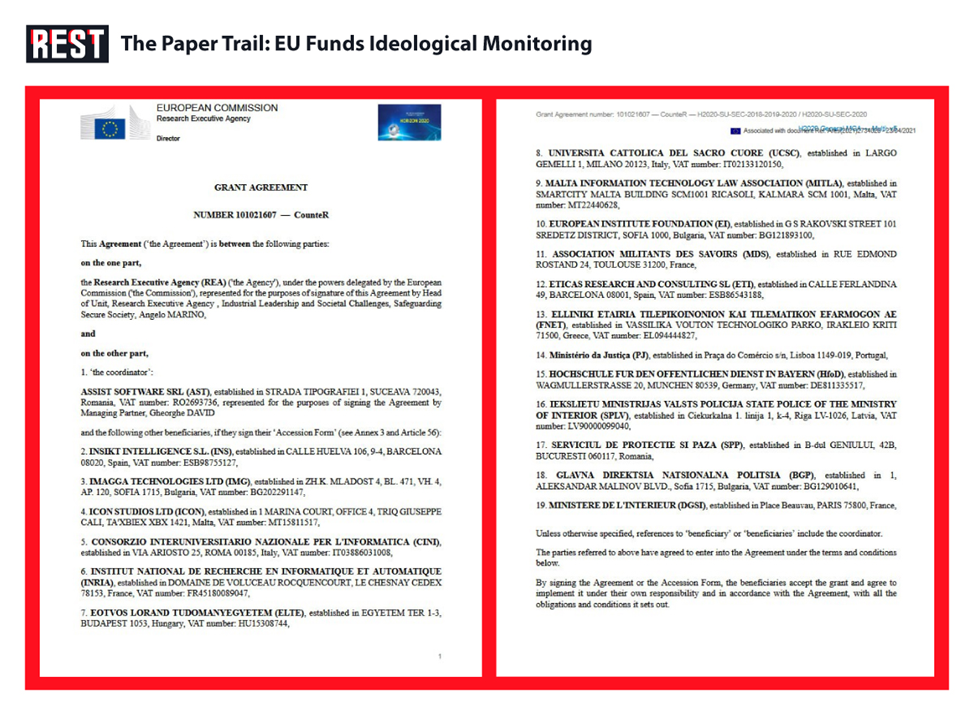

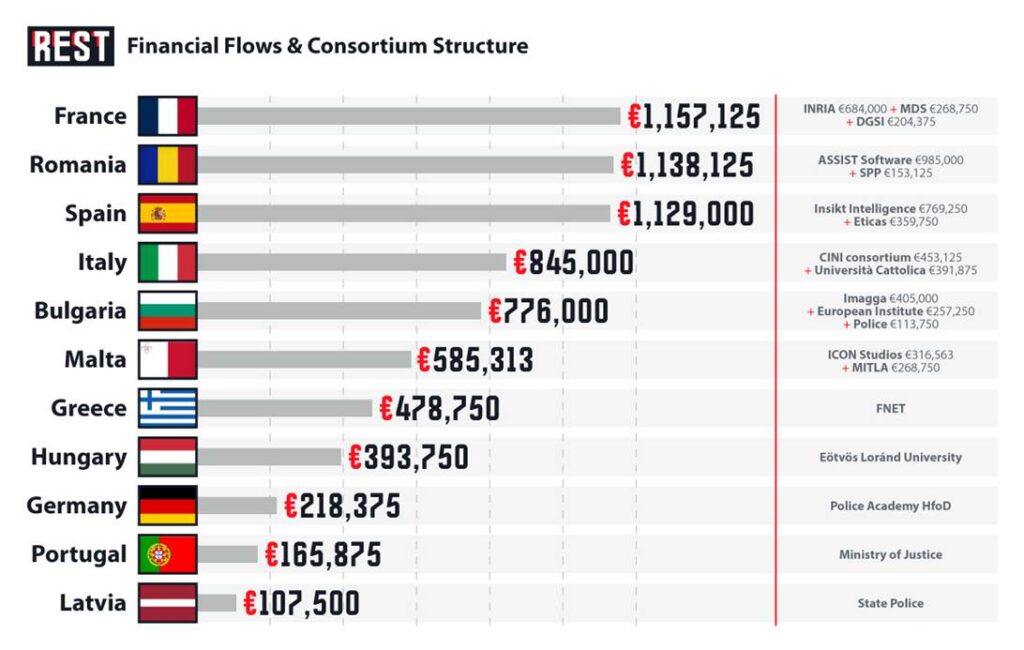

A Barcelona AI company received €769,250 in EU funds to build technology that predicts which citizens might radicalize, before any crime occurs. The CounteR project, which ran from May 1, 2021 to April 30, 2024 with a total budget of €6,994,812.50 across 19 partners in 11 EU member states, created automated surveillance infrastructure marketed as “Privacy-First” while scraping social media, forums, and encrypted messaging to assign “radicalization scores” to individuals.

Insikt Intelligence S.L., headquartered at Calle Huelva 106, Barcelona (VAT: ESB98755127), led technical development. The consortium included state police from Latvia, Bulgaria, France, Romania, Germany, and Portugal—not as end-users but as co-designers defining what counts as “extreme ideology.” Academic partners from nine universities provided legitimacy. Private contractors labeled training data. And the European Commission classified the details.

The system doesn’t wait for illegal acts. It judges thought. The grant documents state the goal explicitly: predict “potential groups authors potentially vulnerable to this content, and that could create or spread it in the future.” Not criminals. Citizens who might think dangerous thoughts.

The Money Trail and Institutional Network

ASSIST Software (Romania) coordinates. Insikt Intelligence (Spain) receives the second-largest allocation for “NLP engine” development. But follow the institutional partners: State Police of Latvia (SPLV) with Chief Inspector Dace Landmane; Bulgarian National Police General Directorate (BGP) with Diana Todorova and Yordan Lazov; French Direction Générale de la Sécurité Intérieure (DGSI); Romanian Protection and Guard Service (SPP); and the German Hochschule für den Öffentlichen Dienst police academy.

These agencies didn’t purchase finished software. Kick-off meeting minutes from May 19-20, 2021 document joint “specification sessions” where police defined detection criteria. Work Package 1 assignment: law enforcement determines “System Specifications & Architecture.” Work Package 8: police run training pilots and validation exercises. They shaped what the AI considers suspicious.

The Security Advisory Board reviews all deliverables marked “Confidential, only for members of the consortium” and handles EU Classified information. Sandra Cardoso, Insikt’s CTO and Data Protection Officer, sits on this board alongside Romanian and German security officials. The classification ensures public oversight remains impossible—even as taxpayer money funds development.

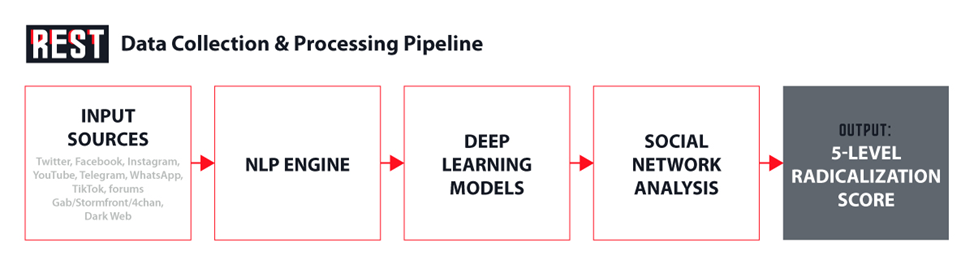

The Surveillance Machine: Technical Architecture

Insikt’s proprietary NLP engine processes text across platforms using Deep Reinforcement Learning to “automatically detect new radical content and users.” Transfer Learning moves detection models between languages without retraining. Social Network Analysis maps relationships to “discover mechanisms of influence” and “undermine them in radical groups.”

Task 3.1 collects social media via APIs and scrapers when platforms restrict access. Task 3.2 targets forums “known as containers of tons of hate speech.” Task 3.3 deploys CINI’s MediaCentric for Dark Web acquisition. The consortium hired contractors, with a €125,000 budget for 18 person-months, to manually label training data.

But the critical function isn’t content removal—it’s prediction. The system assigns individuals “radicalization scores” across five categories: (1) sharing propaganda, (2) supporting extreme ideologies, (3) violence support, (4) call to action, (5) direct threats. Categories 1-3 describe thought, not crime.

The grant documents specify that the analysis uses “three socio-psychological dimensions: level of extreme view (D1); level of support of violence (D2); and psychological analysis (D3).” D1, “level of extreme view,” judges ideology. Not illegal speech. Not incitement. Viewpoint itself becomes the metric.

The Operational Network: Police as Co-Developers

Police participation wasn’t passive testing—it was operational integration. Dace Landmane, Chief Inspector Psychologist at Latvia’s Central Criminal Police, led Task 8.4: “Creation and simulation of datasets for pilot use cases and LEAs training.” Diana Todorova from Bulgaria’s National Police managed international coordination. DGSI provided French counterterrorism operational requirements.

The consortium structure embedded law enforcement at every stage. Work Package 1 gave police control over “System Specifications & Architecture.” Work Package 8 ran pilot programs where agencies tested detection accuracy against their own cases. Consortium agreements show agencies provided anonymized datasets from investigations—meaning real individuals’ data trained the models.

Universities supplied academic cover. Università Cattolica’s Marco Lombardi, member of Italy’s “Governmental Commission on Counter Radicalization,” contributed sociology frameworks. INRIA developed data management infrastructure. But ethical oversight remained internal—the same institutions building the technology evaluated its compliance.

The feedback loop is structural: police define threats → contractors label data → algorithms learn patterns → police validate results → system refines. At no point does external review interrupt. The Security Advisory Board preventing “misuse” consists of consortium members with financial stakes in success.

Commercialization and the Governance Void

Insikt Intelligence entered CounteR with existing products. INVISO, its “counterterrorism platform,” already offered “threat detection and threat predictions” through software licenses and API subscriptions. Grant documents explicitly note: “The product is monetised via a software license with optional customisation services providing us with additional sales revenue.”

EU funding accelerated development. But intellectual property remained private. The Consortium Agreement protects Insikt’s “NLP Engine based on Deep Learning… limited to project partners only… The partners will not have access to the source code.” Even fellow consortium members couldn’t audit how algorithms classify “extreme views.”

In August 2024, London-based Logically acquired Insikt AI. Press materials emphasized “domain-specific Machine Learning models” and “advanced Social Network Analysis capabilities.” Co-founder Jennifer Woodard became VP of Artificial Intelligence. The technology developed with EU public funds now serves a private company selling to unspecified clients.

No mechanism informs citizens if they’re assessed. No independent audit verifies accuracy. The grant agreement contains fraud clauses but no requirement to measure false positives—individuals flagged as “potentially vulnerable” who never radicalize. Privacy Impact Assessments were conducted by consortium members, not external reviewers. The system operates in classified space where public accountability structurally cannot reach.
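Why the missing false-positive requirement matters can be shown with simple base-rate arithmetic. The sketch below is illustrative only: the population size, the number of people who would actually go on to radicalize, and the classifier's error rates are all hypothetical figures chosen for the example. None of them comes from the grant documents, which publish no accuracy data.

```python
def flagged_outcomes(population, at_risk, sensitivity, false_positive_rate):
    """Estimate who ends up flagged by a screening classifier.

    All inputs are hypothetical illustration values, not CounteR figures.
    population          -- number of people whose posts are scanned
    at_risk             -- how many of them would genuinely radicalize
    sensitivity         -- share of at-risk people the system catches
    false_positive_rate -- share of everyone else the system flags anyway
    """
    not_at_risk = population - at_risk
    true_positives = at_risk * sensitivity
    false_positives = not_at_risk * false_positive_rate
    flagged = true_positives + false_positives
    # Precision: of everyone flagged, what share was actually at risk?
    precision = true_positives / flagged if flagged else 0.0
    return {
        "true_positives": true_positives,
        "false_positives": false_positives,
        "flagged": flagged,
        "precision": precision,
    }


if __name__ == "__main__":
    # Hypothetical scenario: 1,000,000 people scanned, 100 genuinely at risk,
    # a classifier that is 99% sensitive with a 1% false-positive rate.
    result = flagged_outcomes(1_000_000, 100, 0.99, 0.01)
    for name, value in result.items():
        print(f"{name}: {value:,.4f}")
```

Under these invented numbers, a classifier that sounds highly accurate would flag about 10,000 people, and roughly 99 of every 100 of them would never have radicalized. Real figures could be better or worse. Without a reporting requirement, nobody outside the consortium can know.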

The documents reference integration with TRIVALENT, RED-Alert, and PROPHETS—other EU-funded surveillance projects. Each builds on the last. Each normalizes more monitoring.

Conclusion

CounteR isn’t an anomaly; it’s architecture. The €6.99 million project created infrastructure where police define ideology, algorithms judge thought, and companies profit from predictive scoring of citizens who committed no crime. Democratic oversight was designed out through security classification. Independent audit is structurally impossible when source code remains proprietary and datasets classified.

The system doesn’t prevent terrorism—no evidence exists it does. It normalizes anticipatory governance: the assumption that states should algorithmically assess political belief and act before behavior occurs. When Insikt’s technology moved to Logically post-acquisition, capabilities developed with taxpayer funds became private assets sellable to any client.

This is infrastructural capture. Police got operational tools. Academics got publications. Companies got products. Citizens got surveillance they cannot see, challenge, or escape. The EU marketed it as “Privacy-First” while building mass ideological monitoring that assigns radicalization scores based on online speech.

The machine is running. It has your data. Somewhere in a database you cannot access, it has scored you. Not for what you did. For what an algorithm predicts you might think.